Company Projects

Python, Google Cloud Compute Engine, Google Cloud SDK, Command line tools

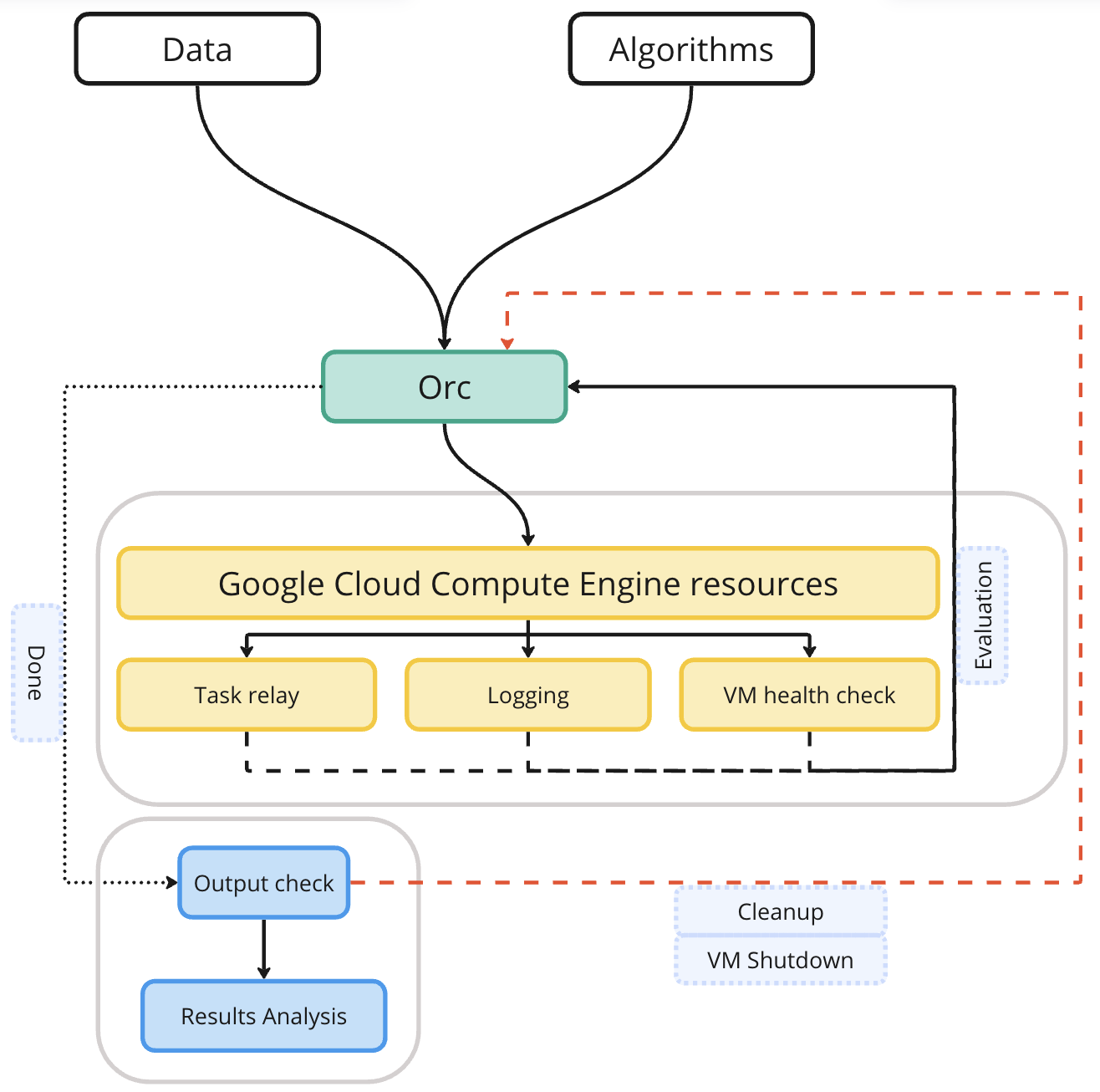

We faced limitations due to the runtime of our CausalAI pipeline. This led us to consider running it on VMs in GC Compute Engine, and to refactor the causal-analysis code and its related code blocks so that multiple tasks could run simultaneously. I owned the refactoring, coding, testing and deployment of this project as a solo mission. The outcome was a success, cutting runtime by a factor of more than 150: where large runs would previously have taken weeks or months, they now took hours.

In the resulting setup, each job is submitted to its own vCPU on a VM, and its results are returned and prepared for the next part of the pipeline, which is then submitted in turn. The computational team prepares the final results so that our Biology team can draw inferences from them. Only as many VMs as are needed to spread the jobs evenly are instantiated, and core saturation on each VM is monitored continuously so that the remaining jobs can be submitted as cores free up. The user only needs to set up the config file and execute a few Python notebook cells. Real-time logging then shows which VM jobs are being submitted to and how many, how many jobs are currently running on each VM, and how many jobs are yet to be submitted. In-run tests check the validity of each job's results, and post-run tests identify jobs that failed or were never picked up.
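The scheduling idea described above (one job per vCPU, only as many VMs as an even spread needs, and resubmission as cores free up) can be sketched as a plain-Python simulation. This is a minimal sketch, not the orchestrator itself: the `VM` class, `plan_vm_count`, `submit_round`, `orchestrate` and the `is_finished` hook are hypothetical names, and a real version would create the VMs in Compute Engine, start jobs on them, and poll their core usage with a pause between checks.

```python
import math
from collections import deque
from dataclasses import dataclass, field


@dataclass
class VM:
    """Hypothetical in-memory stand-in for one Compute Engine VM."""
    name: str
    vcpus: int
    running: set = field(default_factory=set)

    def free_cores(self) -> int:
        return self.vcpus - len(self.running)


def plan_vm_count(n_jobs: int, vcpus_per_vm: int, max_vms: int) -> int:
    """Fewest VMs that give every job its own vCPU, capped at max_vms."""
    if vcpus_per_vm < 1 or max_vms < 1:
        raise ValueError("vcpus_per_vm and max_vms must be positive")
    return min(max_vms, math.ceil(n_jobs / vcpus_per_vm))


def submit_round(queue: deque, vms: list) -> dict:
    """Hand out queued jobs round-robin, one per free vCPU, so load stays even."""
    submitted = {vm.name: 0 for vm in vms}
    progress = True
    while queue and progress:
        progress = False
        for vm in vms:
            if queue and vm.free_cores() > 0:
                vm.running.add(queue.popleft())
                submitted[vm.name] += 1
                progress = True
    return submitted


def orchestrate(jobs, vcpus_per_vm=4, max_vms=3, is_finished=lambda vm, job: True):
    """Submit jobs, collect finished ones, and resubmit the rest as cores free up.

    is_finished is a hypothetical hook; a real version would query each VM and
    sleep between polls instead of looping immediately.
    """
    n_vms = plan_vm_count(len(jobs), vcpus_per_vm, max_vms)
    vms = [VM(f"worker-{i}", vcpus_per_vm) for i in range(n_vms)]
    queue = deque(jobs)
    finished = []
    while queue or any(vm.running for vm in vms):
        counts = submit_round(queue, vms)
        for vm in vms:  # one log line per VM, like the real-time logging
            print(f"{vm.name}: +{counts[vm.name]} submitted, {len(vm.running)} running")
        print(f"{len(queue)} job(s) waiting to be submitted")
        for vm in vms:
            done = {job for job in vm.running if is_finished(vm, job)}
            vm.running -= done  # freed cores are refilled on the next round
            finished.extend(sorted(done))
    return finished
```

With `vcpus_per_vm=4` and `max_vms=2`, ten jobs fill all eight cores in the first round, and the last two are submitted once cores free up.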

The next step in development would have been to set up Pub/Sub messages, triggered by changes on the VMs, which the client-side code would read to trigger either a job submission or a result retrieval depending on the message type. While we recognised the potential benefits of these next steps, we prioritised our resources on initiatives that contribute directly to revenue generation.
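A message-type dispatcher of the kind described could look like the sketch below. It assumes each Pub/Sub message carries a hypothetical `type` attribute (`job_finished` or `core_freed`) and a JSON body; the handler names and payload fields are illustrative only. `listen` uses the `google-cloud-pubsub` streaming-pull client and imports it lazily, so the routing logic can be tried without the package installed.

```python
import json
from typing import Optional


def handle_job_finished(payload: dict) -> str:
    # Hypothetical: would fetch results for payload["job_id"] from its VM.
    return f"retrieve results for job {payload['job_id']}"


def handle_core_freed(payload: dict) -> str:
    # Hypothetical: would submit the next queued job to payload["vm"].
    return f"submit next job to {payload['vm']}"


HANDLERS = {
    "job_finished": handle_job_finished,
    "core_freed": handle_core_freed,
}


def route(msg_type: Optional[str], data: bytes) -> Optional[str]:
    """Pick a handler by message type and pass it the decoded JSON body."""
    handler = HANDLERS.get(msg_type)
    if handler is None:
        return None  # unknown types are ignored
    return handler(json.loads(data.decode("utf-8")))


def on_message(message) -> None:
    """Pub/Sub callback: route on the 'type' attribute, then acknowledge."""
    route(message.attributes.get("type"), message.data)
    message.ack()  # ack unknown types too, so they are not redelivered forever


def listen(project_id: str, subscription_id: str):
    """Start a streaming pull; requires the google-cloud-pubsub package."""
    from google.cloud import pubsub_v1

    subscriber = pubsub_v1.SubscriberClient()
    path = subscriber.subscription_path(project_id, subscription_id)
    return subscriber.subscribe(path, callback=on_message)
```

Calling `listen("my-project", "orchestrator-events").result()` would block and process messages until cancelled; both IDs are placeholders.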

You can view a basic depiction of the flow of the orchestrator in this modal image.

Python, Google Cloud Run, Google Cloud Artifact Registry, Docker, Google Cloud SDK, HTML, CSS, Plotly Dash, Cytoscape.js

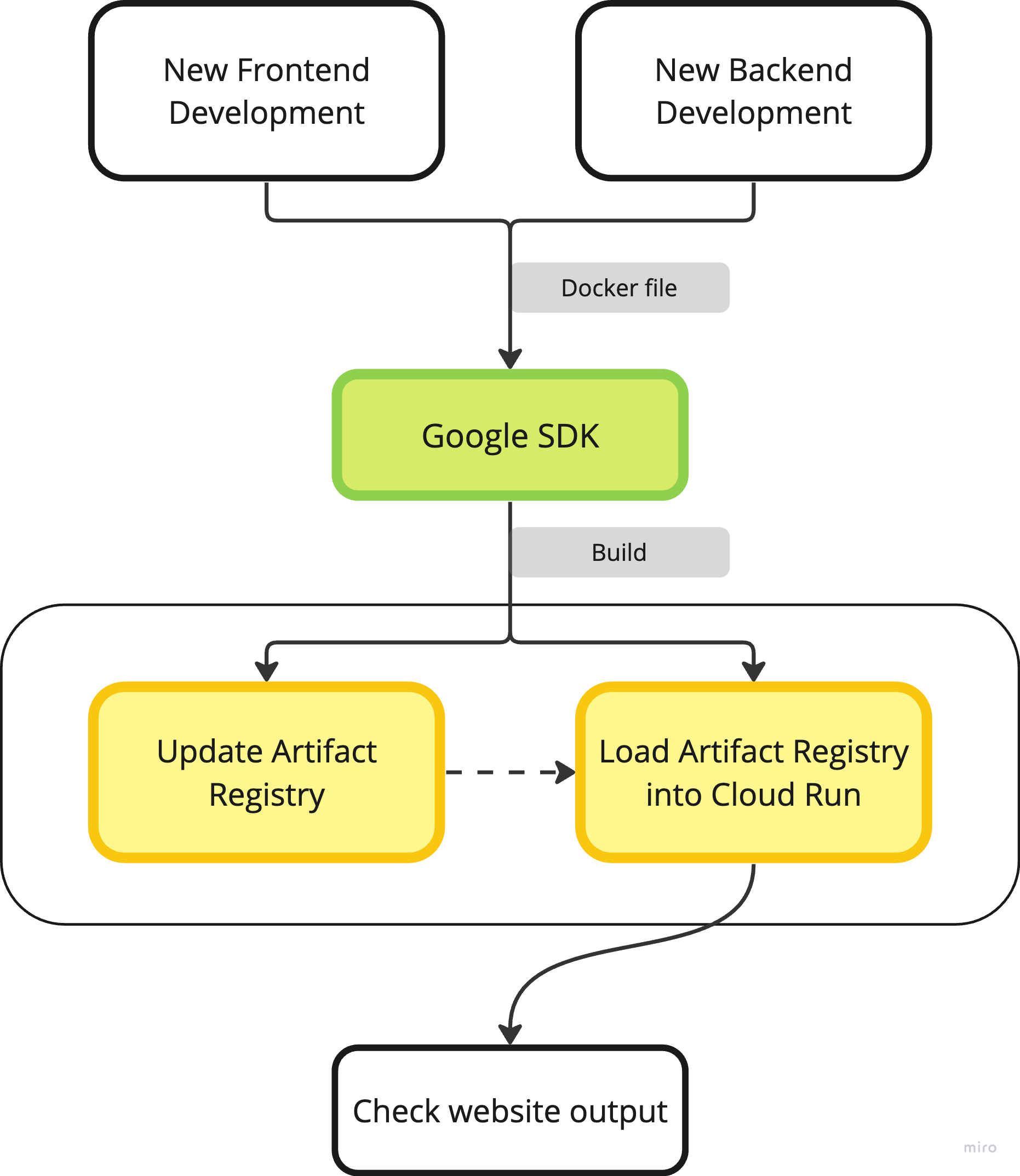

After getting causal inference results from our ALaSCA engine, we realised that sifting through the raw data would take too long, and that reformatting the data for easier viewing wouldn't be enough, since there would still be too much to look at. So I embarked on another solo mission: building a web application that showcases causal inference findings across a spectrum of cancer types and treatment options. The frontend was developed using the Plotly Dash Python library and the Dash plugin for Cytoscape.js, offering an intuitive, interactive interface for exploring complex causal relationships. Data manipulation scripts that work on the results generated by our ALaSCA project were integrated into the application, enabling our Biology team to visualise the data in various formats, including Dash data tables, interactive Cytoscape graphs, and interactive Plotly graphs.
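As an illustration of how causal results can feed the Cytoscape view, the sketch below converts (cause, effect, weight) triples into the element dictionaries that `dash-cytoscape` expects and wires them into a minimal Dash layout. The triple format, the `weight` field and the function names are assumptions; ALaSCA's actual output format is not described here.

```python
def to_cytoscape_elements(edges):
    """Turn (cause, effect, weight) triples into dash-cytoscape element dicts."""
    nodes = {}
    links = []
    for cause, effect, weight in edges:
        for name in (cause, effect):
            nodes.setdefault(name, {"data": {"id": name, "label": name}})
        links.append({"data": {"source": cause, "target": effect,
                               "weight": weight, "id": f"{cause}->{effect}"}})
    return list(nodes.values()) + links


def build_app(elements):
    """Minimal Dash layout around one Cytoscape graph (needs dash, dash-cytoscape)."""
    import dash_cytoscape as cyto
    from dash import Dash, html

    app = Dash(__name__)
    app.layout = html.Div([
        html.H3("Causal graph"),
        cyto.Cytoscape(
            id="causal-graph",
            elements=elements,
            layout={"name": "cose"},  # force-directed layout
            style={"width": "100%", "height": "600px"},
            stylesheet=[
                {"selector": "node", "style": {"label": "data(label)"}},
                # arrows show the direction of the inferred causal effect
                {"selector": "edge", "style": {"curve-style": "bezier",
                                               "target-arrow-shape": "triangle"}},
            ],
        ),
    ])
    return app
```

Locally, `build_app(to_cytoscape_elements(edges)).run(debug=True)` would serve the page. Keeping the data conversion separate from the layout means the same elements can also feed Dash data tables or Plotly figures.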

The deployment process involves packaging the application as a Docker image and pushing it to GC Artifact Registry using the GC SDK. The image is then deployed to GC Run, leveraging the scalability and reliability of GC services. This approach ensured rapid deployment and made scaling and managing the web application hassle-free, allowing us to focus on delivering a seamless user experience and extracting actionable insights from complex causal inference data.
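The build, push and deploy steps can be expressed as the following commands, wrapped here in a small Python helper so they can sit alongside the app code. The region, project, repository, image and service names are placeholders, and `deploy` only prints the commands unless `dry_run=False` is passed; it assumes Docker and the Google Cloud SDK are installed and authenticated.

```python
import shlex
import subprocess


def image_uri(region: str, project: str, repo: str, image: str, tag: str = "latest") -> str:
    """Artifact Registry path: REGION-docker.pkg.dev/PROJECT/REPO/IMAGE:TAG."""
    return f"{region}-docker.pkg.dev/{project}/{repo}/{image}:{tag}"


def deploy_commands(region, project, repo, image, service, tag="latest"):
    uri = image_uri(region, project, repo, image, tag)
    return [
        # let Docker authenticate to Artifact Registry through gcloud
        ["gcloud", "auth", "configure-docker", f"{region}-docker.pkg.dev", "--quiet"],
        ["docker", "build", "-t", uri, "."],
        ["docker", "push", uri],
        ["gcloud", "run", "deploy", service, "--image", uri,
         "--region", region, "--project", project],
    ]


def deploy(region, project, repo, image, service, tag="latest", dry_run=True):
    """Print each command; also execute it when dry_run is False."""
    for cmd in deploy_commands(region, project, repo, image, service, tag):
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

Cloud Run tells the container which port to listen on through the `PORT` environment variable (8080 by default), so the Dash app should bind to `0.0.0.0` on that port, e.g. `app.run(host="0.0.0.0", port=int(os.environ.get("PORT", 8080)))`. Adding `--allow-unauthenticated` to the deploy command makes the service publicly reachable.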

The next steps would have been to separate the data from the web application by storing it in a GC Bucket, and to add code that runs when an instance of the website initialises, transferring the latest version of the data from the bucket to the instance if it has been updated since the last transfer. Data manipulation that can be separated from the application would be deployed to GC App Engine and would "listen" for Pub/Sub messages announcing new files uploaded to specific folders in the storage bucket, triggering script runs depending on the message type.
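The two proposed pieces can be sketched as follows, assuming the `google-cloud-storage` client library. Rather than comparing timestamps, `sync_data` compares the object's generation number, which changes whenever the object is overwritten, against a marker file written on the previous transfer. `script_for` routes Cloud Storage Pub/Sub notifications, which carry `eventType` and `objectId` attributes, to a script by folder prefix. The folder names, script names and marker-file convention are hypothetical.

```python
from pathlib import Path
from typing import Optional


def needs_refresh(remote_generation: int, marker: Path) -> bool:
    """True if no copy was recorded yet or the bucket object has changed since."""
    if not marker.exists():
        return True
    return int(marker.read_text().strip()) != remote_generation


def sync_data(bucket_name: str, blob_name: str, dest: Path) -> bool:
    """Run at instance start-up; needs the google-cloud-storage package."""
    from google.cloud import storage

    blob = storage.Client().bucket(bucket_name).get_blob(blob_name)
    if blob is None:
        raise FileNotFoundError(f"gs://{bucket_name}/{blob_name}")
    marker = dest.parent / (dest.name + ".generation")
    if not needs_refresh(blob.generation, marker):
        return False  # local copy is already current
    blob.download_to_filename(str(dest))
    marker.write_text(str(blob.generation))
    return True


# Hypothetical mapping from bucket folder to the script that processes it.
ROUTES = {
    "raw_results/": "prepare_tables",
    "networks/": "build_graph_elements",
}


def script_for(attributes: dict) -> Optional[str]:
    """Choose a script from a Cloud Storage Pub/Sub notification's attributes."""
    if attributes.get("eventType") != "OBJECT_FINALIZE":  # new or overwritten objects only
        return None
    object_id = attributes.get("objectId", "")
    for prefix, script in ROUTES.items():
        if object_id.startswith(prefix):
            return script
    return None
```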

You can view a client-facing version of the website here.

A visual representation of the deployment flow can be accessed via this modal image.

Python, Google Cloud Compute Engine, Google Cloud Run, Docker, Google Cloud SDK, Google Cloud Serverless VPC Connectors, Streamlit, CSS

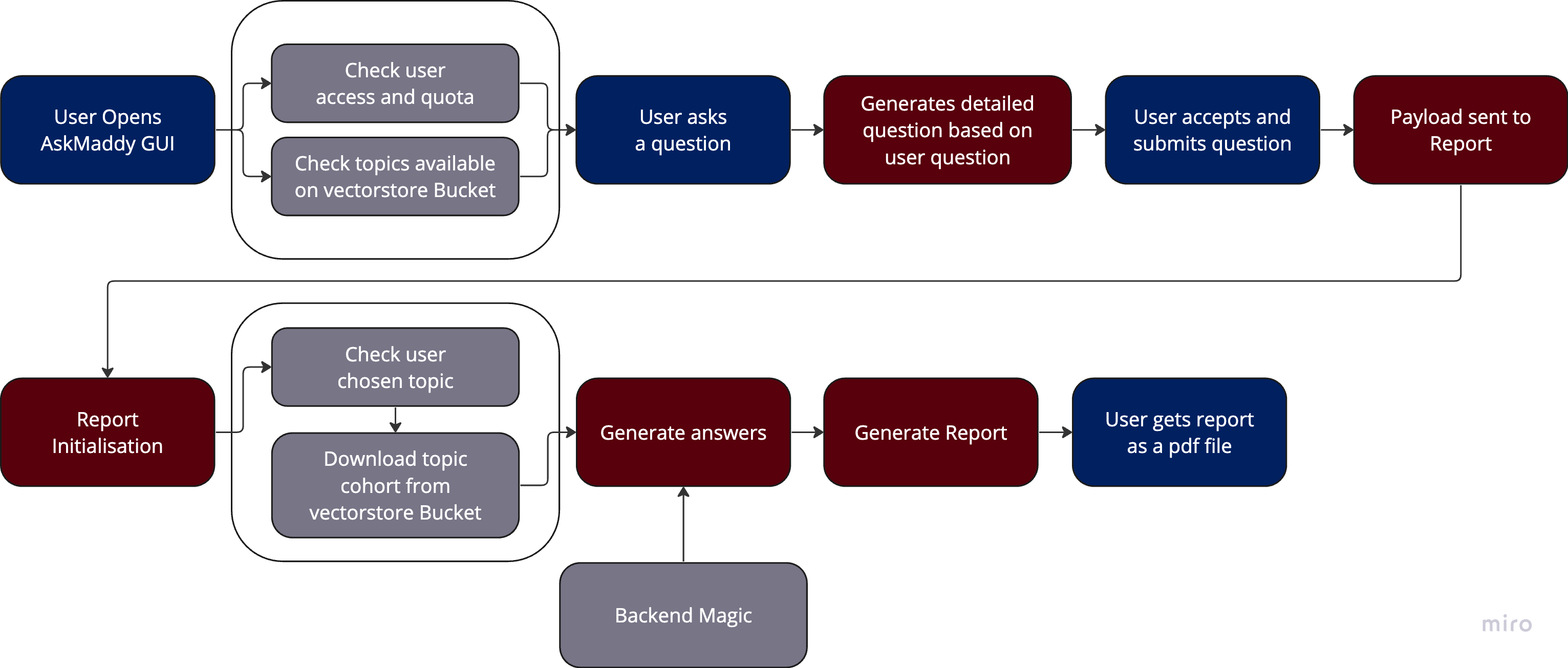

Our latest project is the collaborative development of AskMaddy, a sophisticated RAG (retrieval-augmented generation) system built on an ensemble of LLMs (large language models) and designed to provide scientific answers to scientific questions. Using vector stores of scientific papers as cohorts, AskMaddy lets users select a specific topic, such as DNA Damage Repair, and tailors the system's search to the chosen cohort. The program is divided into three key sections: Frontend, Backend, and Report. I'll skip the Backend description, since my fingers were not in that pie.
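Because the Backend is not described here, the snippet below is only a toy illustration of what selecting a cohort means for retrieval, not AskMaddy's implementation. It assumes each cohort is a list of (passage, embedding) pairs, ranks only the chosen cohort's passages by cosine similarity to the query embedding, and numbers the top results in a prompt so the model can cite them inline. A production system would use an embedding model and a proper vector store; the function names and prompt wording are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, cohorts, topic, k=2):
    """Return the k passages in the selected cohort closest to the query."""
    store = cohorts[topic]  # only the chosen topic's papers are searched
    ranked = sorted(store, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question, passages):
    """Number the retrieved passages so the model can cite them inline as [n]."""
    context = "\n\n".join(f"[{i}] {p}" for i, p in enumerate(passages, 1))
    return (
        "Answer using only the sources below and cite them inline as [n].\n\n"
        f"{context}\n\nQuestion: {question}"
    )
```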

The Frontend section comprises a Streamlit-powered GUI, eligibility checks, and interactions with GC BigQuery tables to fetch vital information such as user registrations and question quotas. It uses the Google Cloud client libraries for Python to upload files to buckets, and integrates LangChain, among other libraries, for client-side language processing steps. It also uses custom CSS injections for enhanced component styling, and session state variables for smooth operation.
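A minimal sketch of the eligibility check, assuming the `google-cloud-bigquery` and `streamlit` packages: a parameterised BigQuery query looks up the user's quota, the result is kept in `st.session_state` across Streamlit reruns, and custom CSS is injected with `st.markdown(..., unsafe_allow_html=True)`. The table name, column names, CSS selector and widget labels are hypothetical.

```python
from typing import Optional

# Hypothetical table and column names.
QUOTA_SQL = """
SELECT question_quota, questions_asked
FROM `my-project.askmaddy.users`
WHERE email = @email
"""

# Custom styling; the selector may differ between Streamlit versions.
CSS = "<style>div.stButton > button {border-radius: 8px;}</style>"


def remaining_questions(quota: int, asked: int) -> int:
    return max(quota - asked, 0)


def fetch_remaining(email: str) -> Optional[int]:
    """Questions left for a registered user, or None if not registered."""
    from google.cloud import bigquery

    client = bigquery.Client()
    job_config = bigquery.QueryJobConfig(
        query_parameters=[bigquery.ScalarQueryParameter("email", "STRING", email)]
    )
    rows = list(client.query(QUOTA_SQL, job_config=job_config).result())
    if not rows:
        return None
    return remaining_questions(rows[0]["question_quota"], rows[0]["questions_asked"])


def render() -> None:
    """Page body; call it from a script launched with `streamlit run`."""
    import streamlit as st

    st.markdown(CSS, unsafe_allow_html=True)  # CSS injection
    if "remaining" not in st.session_state:  # survives Streamlit reruns
        st.session_state["remaining"] = None
    email = st.text_input("Registered email")
    if st.button("Check eligibility") and email:
        st.session_state["remaining"] = fetch_remaining(email)
    remaining = st.session_state["remaining"]
    if remaining is None:
        st.info("Enter a registered email to continue.")
    elif remaining == 0:
        st.warning("You have used up your question quota.")
    else:
        st.text_area("Your scientific question")
        st.caption(f"{remaining} question(s) left")
```

Passing the email as a query parameter, rather than formatting it into the SQL string, keeps user input out of the query text.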

The Report section automates the generation of comprehensive scientific answers to user queries, complete with inline references. Three answers are generated per query, since LLMs are stochastic by nature, followed by a detailed summary of the three answers. The answers, along with the user's question, the generated prompts and the user's details, are converted into a professionally formatted PDF report using the pdfkit Python library. I developed the PDF generation process, including the HTML template design and CSS styling, solo (only my fingers in this pie), ensuring seamless integration and delivery of valuable insights to users via email.
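The HTML-to-PDF step might look like the sketch below, which fills a simple `string.Template`, escapes user- and model-generated text, and hands the result to `pdfkit.from_string`. The template, page options and function names are assumptions, and `pdfkit` requires the `wkhtmltopdf` binary to be installed.

```python
import html
from string import Template

# Hypothetical, deliberately bare template; real styling would come from CSS.
REPORT = Template("""<!DOCTYPE html>
<html><head><meta charset="utf-8"><title>Report</title></head>
<body>
<h1>Report for $user</h1>
<h2>Question</h2><p>$question</p>
$answers
<h2>Summary</h2><p>$summary</p>
</body></html>""")


def render_report(user: str, question: str, answers: list, summary: str) -> str:
    """Fill the template, escaping user- and model-generated text."""
    blocks = "\n".join(
        f"<h2>Answer {i}</h2><p>{html.escape(a)}</p>" for i, a in enumerate(answers, 1)
    )
    return REPORT.substitute(
        user=html.escape(user),
        question=html.escape(question),
        answers=blocks,
        summary=html.escape(summary),
    )


def to_pdf(html_doc: str, out_path: str, css_path=None):
    """Write a PDF; needs pdfkit plus the wkhtmltopdf binary."""
    import pdfkit

    options = {"page-size": "A4", "encoding": "UTF-8", "margin-top": "15mm"}
    return pdfkit.from_string(html_doc, out_path, options=options, css=css_path)
```

`to_pdf(render_report(...), "report.pdf", css_path="report.css")` would write the file, which can then be attached to the outgoing email.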

A fourth section under development is keyword-based scientific article DOI scraping, download and citation generation, using Python's requests library and APIs such as Unpaywall and CrossRef. Apart from the DOI scraping, this is a solo project. Most of the development is complete, allowing fast download of open access papers relevant to a keyword, where the keyword represents a topic that users will be able to select. The downloaded papers will be uploaded to a GC Bucket, triggering a Pub/Sub message, which in turn will start a GC App Engine instance that vectorises the papers and uploads the vectorised papers to another GC Bucket. The contents of this Bucket will be loaded into the Report section every time a user requests a report through the deployed Frontend, ensuring an up-to-date cohort is available.
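The search, download-link and citation steps can be sketched with `requests` against the public CrossRef and Unpaywall REST APIs, plus DOI content negotiation for formatted references. The function names, the default page size and the folder-per-topic upload layout are assumptions; Unpaywall requires an `email` parameter, and the upload only triggers Pub/Sub if a notification has been configured on the bucket.

```python
from pathlib import Path
from typing import Optional

CROSSREF_WORKS = "https://api.crossref.org/works"
UNPAYWALL = "https://api.unpaywall.org/v2/{doi}"


def dois_from_crossref(payload: dict) -> list:
    """Pull the DOIs out of a CrossRef /works search response."""
    items = payload.get("message", {}).get("items", [])
    return [item["DOI"] for item in items if "DOI" in item]


def oa_pdf_url(payload: dict) -> Optional[str]:
    """Best open-access PDF link in an Unpaywall record, if there is one."""
    if not payload.get("is_oa"):
        return None
    return (payload.get("best_oa_location") or {}).get("url_for_pdf")


def search_dois(keyword: str, rows: int = 20) -> list:
    import requests

    r = requests.get(CROSSREF_WORKS, params={"query": keyword, "rows": rows}, timeout=30)
    r.raise_for_status()
    return dois_from_crossref(r.json())


def find_pdf(doi: str, email: str) -> Optional[str]:
    import requests

    r = requests.get(UNPAYWALL.format(doi=doi), params={"email": email}, timeout=30)
    if r.status_code == 404:  # DOI unknown to Unpaywall
        return None
    r.raise_for_status()
    return oa_pdf_url(r.json())


def citation(doi: str, style: str = "apa") -> str:
    """Formatted reference via DOI content negotiation."""
    import requests

    r = requests.get(f"https://doi.org/{doi}",
                     headers={"Accept": f"text/x-bibliography; style={style}"}, timeout=30)
    r.raise_for_status()
    r.encoding = "utf-8"
    return r.text.strip()


def upload(pdf_path: str, bucket_name: str, topic: str) -> str:
    """Store a paper under a per-topic folder; needs google-cloud-storage."""
    from google.cloud import storage

    name = f"{topic}/{Path(pdf_path).name}"
    storage.Client().bucket(bucket_name).blob(name).upload_from_filename(pdf_path)
    return f"gs://{bucket_name}/{name}"
```

Keeping the JSON parsing in small pure functions (`dois_from_crossref`, `oa_pdf_url`) makes them easy to test without network access.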

You can view the "dumbed down" thought process of the system in this modal image.

Web Development

HTML, CSS

These projects were completed as part of the freeCodeCamp Responsive Web Design Certification and are designed to showcase the ability to create responsive web pages using HTML and CSS. Certification-specific projects (excluding other training projects) include a Tribute Page, a Survey Form, a Product Landing Page, a Technical Documentation Page, and a Personal Portfolio Webpage. The projects were completed on freeCodeCamp and can be viewed on my freeCodeCamp profile, which you can visit here.

JavaScript, CSS, HTML

These projects are part of the freeCodeCamp JavaScript Algorithms and Data Structures (Beta) Certification and are designed to showcase the ability to solve algorithmic problems using JavaScript. Certification-specific projects (excluding training projects) include a Palindrome Checker, Roman Numeral Converter, Caesars Cipher, Telephone Number Validator, Cash Register, and more. So far I've completed the first two certification projects and am working on the rest. The projects are done on freeCodeCamp but can only be viewed once I finish the course, so in the meantime I invite you to look at the JavaScript behind each page of this website to get an idea of my proficiency.

JavaScript, HTML, CSS

This website is a personal project to showcase my skills and projects, built with HTML, CSS, and JavaScript. It is designed to be responsive, user-friendly and easy to navigate, with a clean and modern design. It features a homepage, an about page, a testimonial page, and a portfolio page, and is hosted on Firebase, so it can be accessed at any time. It provides information about my skills, projects, and experience, and is constantly updated with new projects and information, so be sure to check back often.

Python Development

Python

These projects were completed as part of the freeCodeCamp Scientific Computing with Python (Beta) Certification and are designed to showcase the ability to solve scientific computing problems using Python. Certification-specific projects (excluding training projects) include an Arithmetic Formatter, Time Calculator, Budget App, Polygon Area Calculator, and Probability Calculator. The projects were completed on freeCodeCamp and can be viewed on my freeCodeCamp profile, which you can visit here.